USING SVM ALGORITHMS IN BUILDING AN AFFECTIVE COMPUTING SYSTEM

Conference: LXXXVIII International Scientific and Practical Conference "Scientific Forum: Technical and Physical-Mathematical Sciences"

Section: Informatics, Computer Engineering and Control

Abstract. Affective computing is a field of study that focuses on developing computational systems capable of understanding, recognizing, and even simulating human emotions. Emotion recognition, a key component of affective computing, involves the identification and analysis of human emotions based on various cues such as facial expressions, voice intonations, and physiological signals.

Keywords: Affective computing, SVM, Machine Learning.

Introduction

Machine learning techniques have transformed emotion recognition, enabling computers to interpret and respond to human emotions more accurately. One widely used algorithm is the Support Vector Machine (SVM). This article explores how SVM can be applied to emotion recognition and analysis in affective computing [3].

Understanding Support Vector Machines (SVM) in Machine Learning

SVM is a supervised learning algorithm that can be used for classification and regression tasks [4]. Its primary objective is to find the optimal hyperplane that separates data points of different classes in a high-dimensional feature space. SVM achieves this by maximizing the margin between the hyperplane and the nearest data points, known as support vectors.
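The margin-maximization idea described above can be illustrated with a minimal sketch, assuming a linear kernel and a tiny hand-made two-class dataset. The data, the function names `train_linear_svm` and `decision_value`, and the values of `lam`, `lr`, and `epochs` are illustrative assumptions rather than anything taken from the cited work. The sketch minimizes the regularized hinge loss by batch subgradient descent, which is one simple way to approximate a soft-margin linear SVM; a practical system would normally rely on an established library instead.

```python
def decision_value(w, b, x):
    """Signed score w.x + b; its sign is the predicted class."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b


def train_linear_svm(points, labels, lam=0.01, lr=0.01, epochs=500):
    """Minimize (lam/2)*||w||^2 + mean hinge loss by batch subgradient descent."""
    n, dim = len(points), len(points[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regularization term
        grad_b = 0.0
        for x, y in zip(points, labels):
            if y * decision_value(w, b, x) < 1.0:  # point violates the margin
                for j in range(dim):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Hypothetical 2-D toy data: two linearly separable clusters labeled -1 and +1.
points = [(-2, -2), (-1, -2), (-2, -1), (-1, -1), (1, 1), (1, 2), (2, 1), (2, 2)]
labels = [-1, -1, -1, -1, 1, 1, 1, 1]

w, b = train_linear_svm(points, labels)
predictions = [1 if decision_value(w, b, x) >= 0 else -1 for x in points]

# Points whose functional margin is approximately 1 sit on the margin boundary;
# these play the role of the support vectors.
support_vectors = [
    x for x, y in zip(points, labels) if y * decision_value(w, b, x) <= 1.05
]
```

In this toy setting only the two points closest to the opposite class end up on the margin boundary, which reflects the idea that the separating hyperplane is determined by the support vectors rather than by the whole training set.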

One of the key advantages of SVM is its ability to handle high-dimensional data efficiently. It can effectively model complex relationships between features, making it suitable for emotion recognition tasks that often involve multiple modalities such as facial expressions, speech, and physiological signals [1].

SVM also has a solid theoretical foundation, as it is based on the principle of structural risk minimization. This principle aims to find a balance between fitting the training data well and generalizing to unseen data, thus reducing the risk of overfitting or underfitting.

The Role of SVM in Affective Computing

In the field of affective computing, SVM plays a crucial role in emotion recognition and analysis [2]. By utilizing SVM, researchers and developers can build robust models that accurately classify and predict emotions based on various input sources. SVM's ability to handle high-dimensional data and generalize well to unseen examples makes it particularly suitable for emotion recognition tasks.

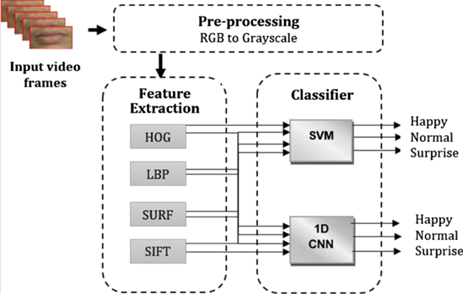

Figure 1. Machine learning algorithms for FER

Compared to other machine learning algorithms such as Neural Networks (NN) or Convolutional Neural Networks (CNN), SVM offers several advantages [4]. Firstly, SVM is computationally efficient to train on datasets of moderate size. This matters in affective computing, where commonly used datasets such as FER2013 contain tens of thousands of facial expression images [5].

Secondly, SVM provides good interpretability, allowing researchers to understand the decision-making process behind emotion recognition models. This interpretability is crucial in domains where explanations and justifications are required, such as healthcare or human-computer interaction.

Comparison of SVM with Other Machine Learning Algorithms for Emotion Recognition

While SVM has its advantages, it is important to acknowledge that no single machine learning algorithm is universally superior for all tasks [6]. In the context of emotion recognition, different algorithms may excel in different scenarios.

For instance, Neural Networks, especially Convolutional Neural Networks, have demonstrated remarkable performance in image-based emotion recognition tasks. CNNs are particularly effective in capturing spatial patterns and hierarchical features from images, enabling them to extract meaningful representations from facial expression data [7]. However, CNNs often require a large amount of labeled training data and substantial computational resources. In contrast, SVM can achieve comparable performance with smaller datasets and less computation, making it a practical choice in scenarios where resources are limited.

Ultimately, the choice of machine learning algorithm for emotion recognition depends on the specific requirements of the application, the available resources, and the desired trade-offs between accuracy, interpretability, and efficiency [8].

Facial expressions are one of the most prominent cues for emotion recognition. SVM can be leveraged to analyze facial expressions and accurately classify emotions based on the visual information they convey. To train SVM models for facial expression analysis, datasets like FER2013 are commonly used. FER2013 is a widely adopted dataset containing over 35,000 grayscale facial images categorized into seven emotion classes: anger, disgust, fear, happiness, sadness, surprise, and neutral [9]. These images provide a diverse set of facial expressions for training and evaluating emotion recognition models.

By extracting relevant facial features, such as facial landmarks or local texture descriptors, and using them as input to SVM, researchers can build models that effectively map facial expressions to corresponding emotions. The trained SVM models can then be used to analyze real-time video streams or images and provide accurate emotion predictions [10].

The FER2013 dataset, introduced earlier, has become a benchmark dataset for facial expression analysis. It consists of three sets: a training set, a public test set, and a private test set [11]. The training set is the largest, containing 28,709 images, while the public and private test sets contain 3,589 images each.

The FER2013 dataset presents several challenges for training SVM models. One of the main challenges is class imbalance: certain emotion classes are significantly underrepresented compared to others [12]. This imbalance can lead to biased models that perform poorly on minority classes. Techniques such as oversampling or undersampling can be employed to address this issue and improve the performance of SVM models.

Additionally, the FER2013 dataset contains images with low resolution, occlusions, and variations in lighting conditions. These factors can reduce the accuracy of emotion recognition models and pose challenges in real-world scenarios where image quality may vary. Preprocessing techniques like image enhancement, normalization, and data augmentation can be applied to mitigate these challenges and improve the robustness of SVM models [13].
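As a rough illustration of the oversampling technique mentioned above, the following sketch duplicates randomly chosen samples of each minority class until every class is as large as the largest one. The function name `random_oversample`, the toy sample identifiers, and the class counts are hypothetical and do not reflect the real FER2013 distribution.

```python
import random
from collections import Counter, defaultdict


def random_oversample(samples, labels, seed=0):
    """Resample minority classes with replacement up to the majority class size."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for sample, label in zip(samples, labels):
        by_class[label].append(sample)
    target = max(len(items) for items in by_class.values())
    out_samples, out_labels = [], []
    for label, items in by_class.items():
        # Draw the missing number of samples at random from the same class.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for item in items + extra:
            out_samples.append(item)
            out_labels.append(label)
    return out_samples, out_labels


# Hypothetical imbalanced toy set: identifiers stand in for feature vectors.
toy_labels = ["happiness"] * 6 + ["fear"] * 3 + ["disgust"] * 2
toy_samples = [f"img_{i}" for i in range(len(toy_labels))]

balanced_samples, balanced_labels = random_oversample(toy_samples, toy_labels)
class_counts = Counter(balanced_labels)
```

Resampling of this kind would normally be applied to the training split only, so that the test sets keep their original class distribution.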

Challenges and Limitations of Using SVM for Affective Computing

While SVM is a powerful tool in affective computing, it is not without its challenges and limitations. One of the main challenges is the selection of appropriate features for emotion recognition. The choice of features greatly impacts the performance of SVM models, and finding the most informative and discriminative features can be a complex task.

Another challenge is the computational complexity of SVM, especially when dealing with large-scale datasets. SVM's training time and memory requirements can increase significantly with the size of the dataset, making it less suitable for real-time or resource-constrained applications.

Furthermore, SVM's performance heavily relies on the proper selection of hyperparameters, such as the kernel function and regularization parameter. Tuning these hyperparameters can be a time-consuming and iterative process, requiring careful experimentation and validation.
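To make the role of these hyperparameters more concrete, here is a small sketch assuming the commonly used radial basis function (RBF) kernel. The kernel width parameter `gamma`, the regularization parameter name `C`, and the grid values are conventional choices used here as assumptions; the article itself does not specify them.

```python
import math
from itertools import product


def rbf_kernel(x, z, gamma):
    """K(x, z) = exp(-gamma * ||x - z||^2)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)


x, z = (0.0, 0.0), (1.0, 1.0)
similarity_wide = rbf_kernel(x, z, gamma=0.1)     # similarity decays slowly
similarity_narrow = rbf_kernel(x, z, gamma=10.0)  # similarity decays quickly

# Hypothetical search grid over the regularization parameter C and gamma.
c_values = [0.1, 1.0, 10.0]
gamma_values = [0.01, 0.1, 1.0]
candidate_settings = list(product(c_values, gamma_values))
```

Each pair in `candidate_settings` would be evaluated on held-out data, which is why tuning cost grows with the size of the grid as well as with the size of the dataset.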

Despite these challenges, SVM remains a popular choice for emotion recognition and analysis due to its interpretability, efficiency, and generalization capabilities.

Enhancing SVM Performance with Feature Engineering and Preprocessing Techniques

To improve the performance of SVM models in affective computing, researchers often employ feature engineering and preprocessing techniques.

Feature engineering involves selecting or creating relevant features that effectively capture the characteristics of the data for emotion recognition. In the context of facial expression analysis, features like facial landmarks, texture descriptors, or action units can be extracted and used as input to SVM.
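One example of a texture descriptor is the local binary pattern (LBP). The sketch below shows a basic 3x3 variant under simplifying assumptions: the image is a plain list of rows of grayscale values, border pixels are skipped, and the function name `lbp_histogram` and the neighbor ordering are illustrative choices. The resulting 256-bin histogram is the kind of fixed-length vector that could be passed to an SVM.

```python
# Offsets of the 8 neighbors, visited clockwise starting at the top-left pixel.
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                    (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_histogram(image):
    """Return a normalized 256-bin histogram of basic 3x3 LBP codes."""
    rows, cols = len(image), len(image[0])
    histogram = [0] * 256
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            center = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(NEIGHBOR_OFFSETS):
                # Set the bit when the neighbor is at least as bright as the center.
                if image[r + dr][c + dc] >= center:
                    code |= 1 << bit
            histogram[code] += 1
    total = sum(histogram)
    return [count / total for count in histogram]


# Hypothetical 4x4 grayscale patch with a bright vertical stripe.
patch = [
    [10, 200, 10, 10],
    [10, 200, 10, 10],
    [10, 200, 10, 10],
    [10, 200, 10, 10],
]
features = lbp_histogram(patch)
```

In practice the histogram is often computed per image region and the regional histograms are concatenated, so that some spatial layout of the face is preserved.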

Preprocessing techniques are applied to the data before training SVM models to enhance their robustness and generalization. Techniques such as image enhancement, normalization, noise reduction, and data augmentation can be used to improve the quality and diversity of the training data.
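Two of these steps, normalization and data augmentation, can be sketched as follows. The example assumes images stored as lists of rows of grayscale values; per-image standardization and horizontal flipping are just two common options, and the function names `standardize` and `horizontal_flip` are hypothetical.

```python
import math


def standardize(image):
    """Rescale pixel values to zero mean and unit variance within one image."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    variance = sum((p - mean) ** 2 for p in pixels) / len(pixels)
    std = math.sqrt(variance) or 1.0  # avoid division by zero for flat images
    return [[(p - mean) / std for p in row] for row in image]


def horizontal_flip(image):
    """Mirror the image left to right; a simple augmentation for face images."""
    return [row[::-1] for row in image]


# Hypothetical 2x2 grayscale image.
image = [[0, 255], [255, 0]]
normalized = standardize(image)
augmented = horizontal_flip(image)
```

Standardization reduces the effect of global lighting differences between images, while flipping adds a plausible mirrored variant of each training face without changing its emotion label.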

By combining effective feature engineering and preprocessing techniques with SVM, researchers can enhance the performance of emotion recognition models and achieve higher accuracy in affective computing tasks.

The Future of SVM in Emotion Recognition and Analysis

As technology advances and the field of affective computing continues to evolve, the role of SVM in emotion recognition and analysis is expected to expand. One promising direction is the integration of SVM with other machine learning algorithms, such as deep learning techniques. Deep learning models, particularly Convolutional Neural Networks, have shown remarkable performance in various computer vision tasks, including emotion recognition. By combining the strengths of SVM and deep learning, researchers can potentially achieve even higher accuracy and robustness in affective computing.

Another area of future development is the exploration of multimodal emotion recognition. Emotions are often expressed through multiple channels, including facial expressions, speech, and physiological signals. SVM's ability to handle high-dimensional data makes it well-suited for combining and analyzing these modalities. By integrating multiple input sources and leveraging SVM's classification capabilities, more comprehensive and accurate emotion recognition systems can be developed.
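One simple way to combine modalities is feature-level (early) fusion, sketched below under the assumption that each modality has already been reduced to a numeric feature vector. The function names and the example vectors are hypothetical; each vector is scaled to unit length before concatenation so that no modality dominates purely because of its numeric range.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit Euclidean length (zero vectors are left as-is)."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm > 0 else list(vector)


def fuse_modalities(*modality_features):
    """Concatenate per-modality feature vectors after normalizing each one."""
    fused = []
    for features in modality_features:
        fused.extend(l2_normalize(features))
    return fused


# Hypothetical feature vectors for one sample.
face_features = [3.0, 4.0]
speech_features = [0.0, 0.0, 2.0]
fused_vector = fuse_modalities(face_features, speech_features)
```

The fused vector could then serve as the input to a single SVM classifier; an alternative design trains one classifier per modality and combines their decisions afterwards.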

Conclusion

In conclusion, Support Vector Machines (SVM) offer significant potential for enhancing affective computing, particularly in emotion recognition and analysis. SVM's ability to handle high-dimensional data, generalize well to unseen examples, and provide interpretability makes it a valuable tool in this domain.

While other machine learning algorithms like Neural Networks have their advantages, SVM stands out for its computational efficiency, interpretability, and suitability for resource-constrained scenarios. By utilizing SVM for facial expression analysis and training on datasets like FER2013, researchers can build robust emotion recognition models.

To overcome challenges and enhance SVM performance, feature engineering and preprocessing techniques can be applied. These techniques, combined with SVM's classification capabilities, can lead to more accurate emotion recognition models in affective computing.

As the field of affective computing advances, the integration of SVM with other machine learning algorithms and the exploration of multimodal emotion recognition hold promise for further advancements in this area. By harnessing the potential of SVM, researchers can contribute to the development of more sophisticated and emotionally intelligent computational systems.